Every spring, British students take their A-level exams, which are used to determine admission into college.

But this year was different. With the Covid-19 pandemic still raging, spring’s A-levels were canceled. Instead, the government took an unorthodox, and controversial, approach to assessing admissions without those exam scores: It used a mathematical rule to predict how students would have done on their exams, then used those estimates as a stand-in for actual scores.

The approach the government took was fairly simple. To estimate how well a student would have done had they taken the exam, it used two inputs: the student’s grades this year and the historical track record of the student’s school.

So a student who got excellent grades at a school where top students usually get good scores would be predicted to have achieved a good score. A student who got excellent grades at a school where excellent grades historically haven’t translated to top-tier scores on the A-levels would instead be predicted to get a lower score.
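To make the two-input idea concrete, here is a deliberately simplified, hypothetical sketch in Python. It is not Ofqual’s actual model. The 0-100 score scale, the 50/50 weighting, the function name `predict_exam_score`, and the school averages are all invented for illustration. The sketch shows how two students with identical grades can receive different predictions purely because of their schools’ track records.

```python
from typing import Sequence


def predict_exam_score(student_grade: float,
                       school_history: Sequence[float],
                       weight_on_student: float = 0.5) -> float:
    """Blend a student's own grade with their school's past exam average.

    Hypothetical illustration only, not Ofqual's 2020 model. Scores are on an
    assumed 0-100 scale, and the 0.5 weight is an arbitrary choice.
    """
    if not school_history:
        raise ValueError("school_history must not be empty")
    if not 0.0 <= weight_on_student <= 1.0:
        raise ValueError("weight_on_student must be between 0 and 1")
    # The "track record" input: the school's average past exam score.
    school_average = sum(school_history) / len(school_history)
    # Weighted blend of the student's grade and the school's history.
    return weight_on_student * student_grade + (1 - weight_on_student) * school_average


# Made-up past exam averages for two schools.
strong_school = [85.0, 88.0, 82.0]
weak_school = [55.0, 60.0, 56.0]

# Same excellent grade, different schools.
print(predict_exam_score(90.0, strong_school))  # 87.5
print(predict_exam_score(90.0, weak_school))    # 73.5
```

Under these made-up numbers, the same grade of 90 becomes a predicted 87.5 at one school and 73.5 at the other; the entire gap comes from the school term, not the student.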

The overall result? More top grades were awarded than in any year when students actually sat the exam.

But many individual students and teachers were still angry about scores they felt were too low. Even worse, the adjustment for how well a school was “expected” to perform turned out to be strongly correlated with how rich the school was. Rich kids tend to do better on A-levels, so the prediction process awarded kids at rich schools higher grades.

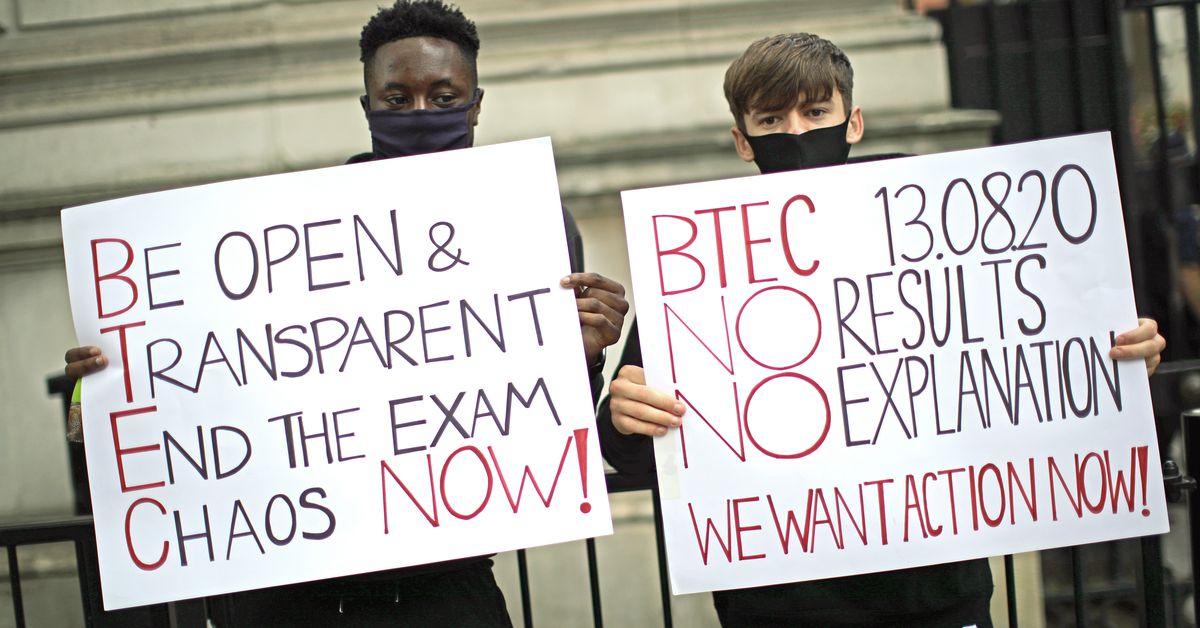

The predictive process and its outcome set off alarm bells. One Guardian columnist called it “shockingly unfair.” Legal action was threatened. After a weekend of angry demonstrations at which students, teachers, and parents chanted, “Fuck the algorithm,” Britain backed off and announced that students would receive the grade their teachers had estimated whenever it was higher than the formula’s prediction.

What’s playing out in Britain is several things at once: a drama brought on by the Covid-19 pandemic, made worse by bad administrative decisions, against a backdrop of class tensions. It’s also an illustration of a fascinating dilemma often discussed as “AI bias” or “AI ethics,” even though it has almost nothing to do with AI. And it raises important questions about which biases get our attention and which, for whatever reason, largely escape our scrutiny.

Imagine a world where rich children and poor children are equally likely to do drugs, but poor children are five times more likely to be arrested. Every time someone is arrested, a prediction system tries to predict whether they will re-offend: that is, whether someone like the person arrested for drugs is likely to be arrested for drugs again within a year. If they are likely to re-offend, they get a harsher sentence. If they are unlikely to re-offend, they are released on probation.

Since rich children are less likely to be arrested, the system will correctly predict that they are less likely to be re-arrested. It will declare them unlikely to re-offend and recommend a lighter sentence. The poor children are much more likely to be re-arrested, so the system tags them as likely re-offenders and recommends a harsh sentence.

This is grossly unfair. There is no underlying difference at all in the tendency to do drugs, but the system takes a disparity created at one stage (arrest) and magnifies it at the next (sentencing) by using it to make criminal judgments.
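The arithmetic of this thought experiment can be sketched in a few lines of Python. Every number here (1,000 people per group, 200 re-offenders in each, arrest odds of 1 in 20 versus 1 in 4, and the 0.03 threshold) is a hypothetical assumption chosen to match the setup above, not real data, and the function names are invented for illustration. The point is that a predictor that can only observe re-arrests will rate the poor group five times riskier even though true re-offending is identical.

```python
# Hypothetical numbers for the thought experiment; none of this is real data.
PEOPLE_PER_GROUP = 1000
REOFFENDERS = 200                        # same drug use in both groups, by assumption
ARREST_ONE_IN = {"rich": 20, "poor": 4}  # poor people are 5x more likely to be arrested


def true_reoffense_rate(group: str) -> float:
    # What we actually care about. The group argument is ignored on purpose:
    # by assumption, both groups re-offend at the same rate.
    return REOFFENDERS / PEOPLE_PER_GROUP


def observed_rearrest_rate(group: str) -> float:
    # What the records show: only re-offenders who get caught appear in the data.
    rearrested = REOFFENDERS // ARREST_ONE_IN[group]
    return rearrested / PEOPLE_PER_GROUP


def recommend(group: str, threshold: float = 0.03) -> str:
    # A naive predictor that treats re-arrest as if it were re-offending.
    return "harsh sentence" if observed_rearrest_rate(group) > threshold else "probation"


for group in ("rich", "poor"):
    print(group, true_reoffense_rate(group), observed_rearrest_rate(group), recommend(group))
```

With these assumptions, both groups have a true re-offending rate of 0.2, but the observed re-arrest rates are 0.01 and 0.05, so only the poor group crosses the threshold for a harsh sentence.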

“The algorithm shouldn’t be predicting re-jailing; it should be predicting re-offending. But the only proxy variable we have for offending is jailing, so it ends up double-counting anti-minority judges and police,” Leor Fishman, a data scientist who studies data privacy and algorithmic fairness, told me.

If the prediction system is an AI trained on a large dataset to predict criminal recidivism, this problem gets discussed as “AI bias.” But it’s easy to see that the AI is not actually a crucial component of the problem. If the decision is made by a human judge, going off their own intuitions about recidivism from their years of criminal justice experience, it is just as unfair.

Some writers have pointed to the UK’s school decisions as an example of AI bias. But it’s a stretch to call the approach taken by the Office of Qualifications and Examinations Regulation (Ofqual), which took student grades and adjusted them for the school’s past performance, “artificial intelligence.” It was a simple math formula for combining a few data points.

The larger category here is perhaps better called “prediction bias”: cases where predicting some variable yields disturbingly unequal predictions. Often those predictions are deeply influenced by factors like race, wealth, and national origin, which anti-discrimination laws broadly prohibit taking into account and which it is deeply unfair to hold against people.

AIs are just one tool we use to make predictions, and while their failings are often particularly legible and maddening, they are not the only system that fails in this way. It makes national news when a husband and wife with the same income and debt history apply for a credit card and are offered wildly different credit limits thanks to an algorithm. The same outcome probably goes unnoticed when the decision is made by a local banker who doesn’t rely on complex algorithms.

In criminal justice, in particular, algorithms trained on recidivism data make sentencing recommendations with racial disparities, for instance by unjustly recommending longer prison terms for Black men than for white men. But judges who don’t use algorithms make these decisions based on sentencing guidelines and personal intuition, and that produces racial disparities, too.

It’s important not to use unjust systems to determine access to opportunity. If we do that, we end up punishing people for having been punished in the past, and we etch societal inequalities deeper in stone. But it’s worth asking why a system that predicts poor children will do worse on exams generated so much more rage than the regular system that simply administers exams, on which poor children do worse year after year.

For something like school exam scores, there aren’t disparities just in predicted outcomes but in real outcomes too: Rich children generally score better on exams for many reasons, from better schools to better tutors to more time to study.

If the exams had actually happened, there would have been widespread disparities between the scores of rich kids and poor kids. That might have angered some people, but it likely wouldn’t have led to the widespread fury that similar disparities in the predicted scores produced. Somehow, we’re more comfortable with disparities when they show up in actual measured test data than when they show up in our predictions about that data. The cost to students’ lives in each instance is the same.

The UK effectively admitted, with its test score adjustments, that many children in the UK attended schools where it was very implausible they would get good exam scores, so implausible that even excellent grades throughout school weren’t enough for the government to expect they’d learned everything they needed for a top score. The government may have backed down and awarded them that top score anyway, but the underlying problems with the schools remain.

We should get serious about addressing disparities when they show up in real life, not just when they show up in predictions, or we’re pointing our outrage at the wrong place.

August 22, 2020 at 06:30PM


The UK used a formula to predict students’ scores for canceled exams. Guess who did well. - Vox.com
