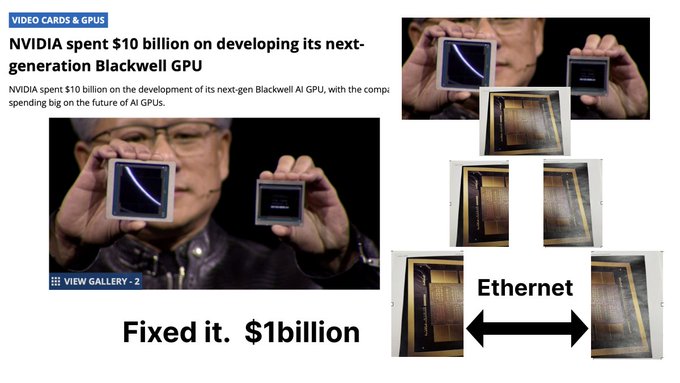

As a strong supporter of open standards, Jim Keller tweeted that Nvidia should have used the Ethernet protocol for chip-to-chip connectivity in its Blackwell-based GB200 GPUs for AI and HPC. Keller contends this could have saved Nvidia and users of its hardware a lot of money. It would also have made it a bit easier for those customers to migrate their software to different hardware platforms, which Nvidia doesn't necessarily want.

When Nvidia introduced its GB200 GPU for AI and HPC applications, the company focused primarily on its AI performance and advanced memory subsystem, saying little about how the device was made. Meanwhile, Nvidia's GB200 GPU comprises two compute processors stitched together using TSMC's CoWoS-L packaging technology and the NVLink interconnection technology, which uses a proprietary protocol. This isn't an issue for those who already use Nvidia's hardware and software, but it poses a challenge for the rest of the industry when porting software away from Nvidia's platforms.

There is a reason why Jim Keller, a legendary CPU designer and chief executive officer of Tenstorrent, an Nvidia rival, suggests that Nvidia should have used Ethernet instead of proprietary NVLink. Nvidia's platforms use proprietary low-latency NVLink for chip-to-chip and server-to-server communications (where it competes against PCIe with the CXL protocol on top) and proprietary InfiniBand connections for higher-tier comms. To maximize performance, software is tuned for the peculiarities of both technologies. For obvious reasons, this could somewhat complicate porting software to other hardware platforms, which is good for Nvidia and not so good for its competitors.

There is a catch, though. Ethernet is a ubiquitous technology at both the hardware and software levels, and it competes with Nvidia's low-latency, high-bandwidth (up to 200 Gb/s) InfiniBand interconnect for data centers. Performance-wise, Ethernet (particularly next-generation 400 GbE and 800 GbE) can compete with InfiniBand.

However, InfiniBand still has some advantages in its features for AI and HPC and in its superior tail latencies, so some might say that Ethernet's capabilities don't cater to emerging AI and HPC workloads. Meanwhile, the industry, spearheaded by AMD, Broadcom, Intel, Meta, Microsoft, and Oracle, is developing the Ultra Ethernet interconnection technology, which is poised to offer higher throughput and features for AI and HPC communications. If it delivers, Ultra Ethernet will become a more viable competitor to Nvidia's InfiniBand for these sorts of workloads.

Nvidia's dominance with its CUDA software platform is also being challenged, hence the advent of the Unified Acceleration Foundation (UXL), a broadly supported industry consortium that includes Arm, Intel, Qualcomm, and Samsung, among others, and is intended to provide an alternative to CUDA.

Of course, Nvidia needs data center platforms that can be used here and now, which is probably at least part of the reason it is willing to spend billions on proprietary technologies. If open-standard technologies like PCIe with CXL and Ultra Ethernet outpace Nvidia's proprietary NVLink and InfiniBand in performance and capabilities, Nvidia will have to redevelop its platforms, so Keller advises (or trolls) that Nvidia should adopt Ethernet. However, this may be years away, so for now, Nvidia's designs continue to leverage proprietary interconnects.

April 13, 2024 at 08:56PM

https://ift.tt/qPO8kBW

Jim Keller suggests Nvidia should have used Ethernet to stitch together Blackwell GPUs — Nvidia could have saved ... - Tom's Hardware